I passed my defense today

Posted by Yuling Yao on May 07, 2021.

I passed my thesis defense today. Passing the defense per se felt less exciting than I had imagined, in part because everyone passes the dissertation defense anyway. It is like the p-value in a medical publication: readers already know it is significant before reading the paper. Or it is like the “Results” section of a conference paper: readers already know the experiment will show that “our proposed method beats all benchmarks (after some random-seed-hacking)”. An inevitable promise contains its own languishment and gloominess from the very beginning.

[Image of the Bhagavad Gita, from the Hindu epic, with which Oppenheimer illustrated the idea of self-implied destruction.]

Graduate school is analogous to an open-ended workflow: we stop the process before convergence. I have accumulated maybe 10 first/co-first-author papers, but I also have more unfinished papers waiting to be finished. I built a few methods and they generated ~800 citations, but I also know these methods have limitations and rarely scale to really big models and datasets. I have started to establish myself professionally, but I also did not win a Nobel Prize for my thesis, like de Broglie, or get a faculty job at Berkeley or anything, like Andrew Gelman, or drop out of school and start a search engine or a social media company. I learned some more statistics, perhaps only a little bit more math, a hint of computer science, and some blog writing skills, but I also forgot many things along the way, such as differential geometry and functional analysis. I read papers on a frequent basis, but I also have brand new textbooks on my shelf that I bought a few years ago and have not read a single page of since. I have taken those classes, but I have never fully understood RKHS, decoupling, or semiparametrics. I have tried from time to time, yet I still do not grasp stochastic PDEs, optimal transport, the pricing of Asian options, gradient flows, Riemannian manifolds, Cheeger’s inequality, or, for that matter, the vast majority of things.

A Box-type quote here would be

All graduate school experiences are unsound, but some are useful.

which itself invites further debate on whether I need better diagnostics for my usefulness. The catch is that I cannot rerun the model even if the diagnostics indicate a horrible fit. In this sense, graduate school is not even a sophisticated workflow; it is a one-shot pre-asymptotic MCMC sampler: you get what you get. Slightly worse than a Markov chain, human beings have a longer memory, which drives me to ask retrospective questions: If I had seen the local mode that I ended up in, should I have adopted a different step size five years ago? If I had known that I would not land in the typical set, should I have shifted my adaptation direction during warm-up?

Clearly, I would have fewer regrets if I were Markovian, or if I abandoned the potential outcomes framework.

Anyway, I am glad and grateful to have finished this phase of life. I am pasting the acknowledgements from my thesis here:

Starting with “this thesis would not have been possible without these people” would be cliché and kitsch. Such a claim misleadingly defines the causal effect of an unrealistic and irreproducible intervention, although part of this thesis does address leave-one-out cross validation and influence analysis. That being said, the aid from my advisor, collaborators, and friends throughout my graduate study and research is not a latent parameter to estimate; it is an input that is palpable and treasurable.

It would be unscalable to exhaustively list all the support I enjoyed from my doctoral advisor Andrew Gelman. Aside from the inspiration I have drawn from his blackboard and chalk, emails, Overleaf editing logs, and those meetings with three hundred topics emerging in the same room, Andrew has shown me by example what a good statistician can be. To the same extent that model fitting benefits from fake data simulation, my trajectory as an aspiring researcher is boosted by simulations of a fake Andrew in my head: “What would Andrew say to a sloppy graph? What model would Andrew consider given this dataset? What insights would Andrew write down in his pocket notebook after reading these papers?” Well, even this metaphor is adapted from one of his.

I am grateful to Aki Vehtari for his guidance and discussions on much, if not all, of my research. In addition to all the references he meticulously pointed me to, a simulated Aki would also appear in the previous imaginary loop: “What would Aki say to this new idea? Would Aki be happy with this software implementation?”

I would like to thank the collaborators with whom I have worked closely: Dan Simpson, Lex van Geen, Bob Carpenter, Yu-Sung Su, Jonah Gabry, Ben Bales, Jonathan Auerbach, Gregor Pirš, Charles Margossian, Collin Cademartori, and others. A universal statistical principle applies: “the most important thing is what data you work with, not what you do with the data.” Its corollary lower-bounds how much I have already learned from stacking all these collaborators.

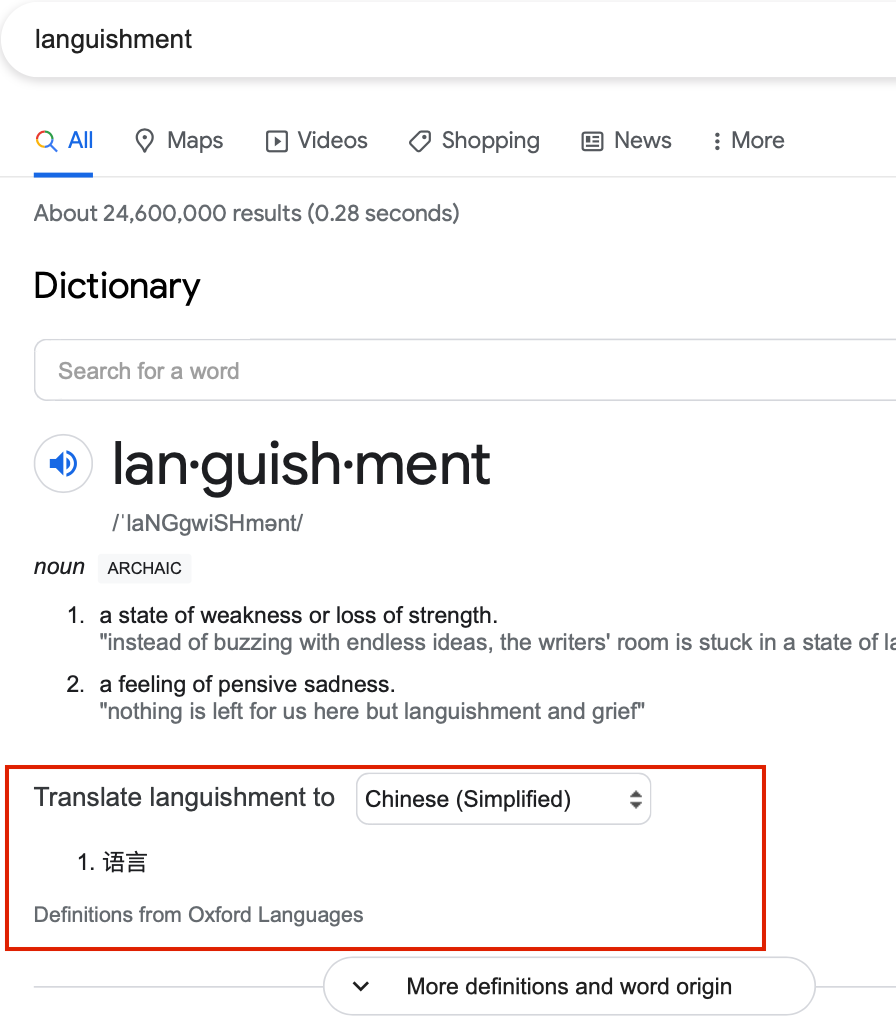

P.S. This is quite unrelated, but my text editor did not recognize the word “languishment” when I typed it, so I double-checked on Google. It appears Google Translate automatically translates this word into “语言” in Chinese, which means “language”. Hmmm, the Bayes classifier is no good.